I’m using it as a coding assistant. It’s actually written most of the code so far. I’m using Visual Studio Code with GitHub Copilot (on a Pro trial) and the Claude Sonnet 4.5 model (pretty new) in agent mode, which means it can update code in files directly and read/use the terminal with approval.

This is how it started, with a lot of iteration after that.

Start

#new I want to create a new project called FluidTouch that uses LVGL with a Elecrow CrowPanel 7 inch display

I’ll create a new LVGL project workspace for your FluidTouch project with the Elecrow CrowPanel 7-inch display.

Generating plan to create a new workspace

Now I’ll set up the FluidTouch project workspace systematically. Let me start by creating the instruction file and tracking progress.

Created 6 todos

Created copilot-instructions.md

Starting (2/6) Scaffold LVGL project structure

Created platformio.ini

Created main.cpp

Created lv_conf.h

Created User_Setup.h

Created README.md

Starting (5/6) Install required extensions

PlatformIO IDE extension is already installed

Completed (5/6) Install required extensions

Perfect! Your FluidTouch project is now set up and ready for development!

What’s Been Created

Project Structure

Features Included

- LVGL v8.3 graphics library

- TFT_eSPI display driver for 800x480 resolution

- Touch input support

- Sample UI with label and button

- PSRAM support enabled

Extensions

- PlatformIO IDE (already installed)

Next Steps

- Build the project: Click the PlatformIO icon in the sidebar, then click “Build”

- Connect your CrowPanel: Plug in your Elecrow CrowPanel 7" display via USB

- Upload: Click “Upload” in PlatformIO to flash the firmware

- Monitor: Use Serial Monitor to see debug output

Important Notes

Pin Configuration: The pin assignments in User_Setup.h are typical for CrowPanel, but verify them against your specific model’s documentation.

Touch Calibration: You may need to adjust the touch calibration values in main.cpp for accurate touch input.

Display Driver: If the display doesn’t work, try uncommenting the ST7796_DRIVER line in User_Setup.h instead of ILI9488_DRIVER.

The project is ready to build and deploy to your Elecrow CrowPanel 7" display!

It got a bunch of things wrong that we chatted through, but it wasn’t a terrible start. Sometimes it started going in circles, at which point I just fixed the problem myself. At one point, I pointed it at an Elecrow example project, and it was able to parse all the pin mappings from it.

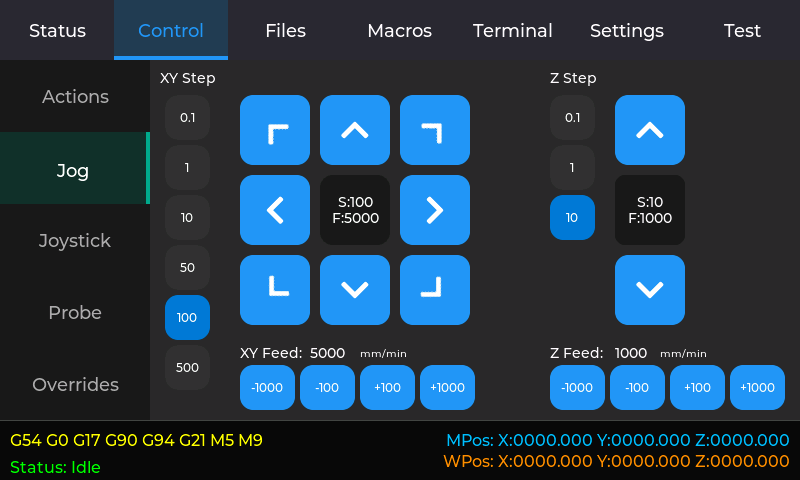

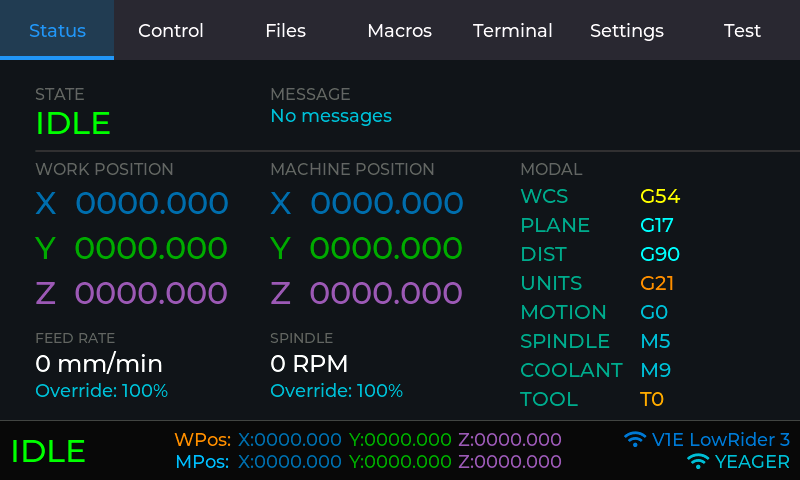

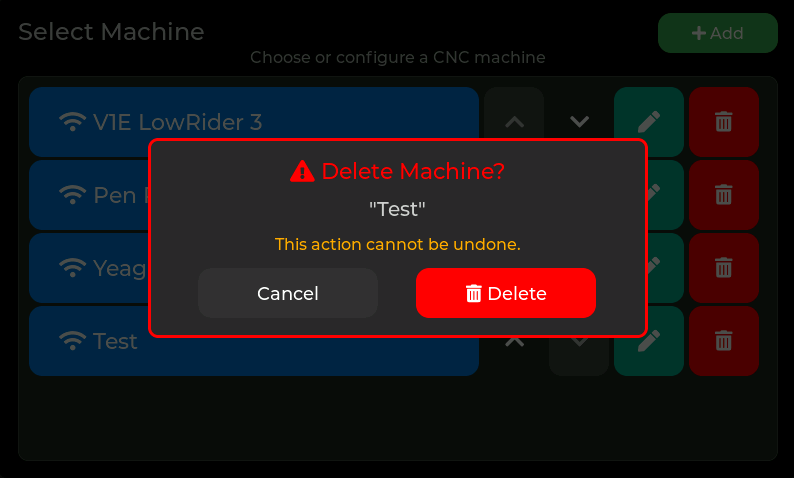

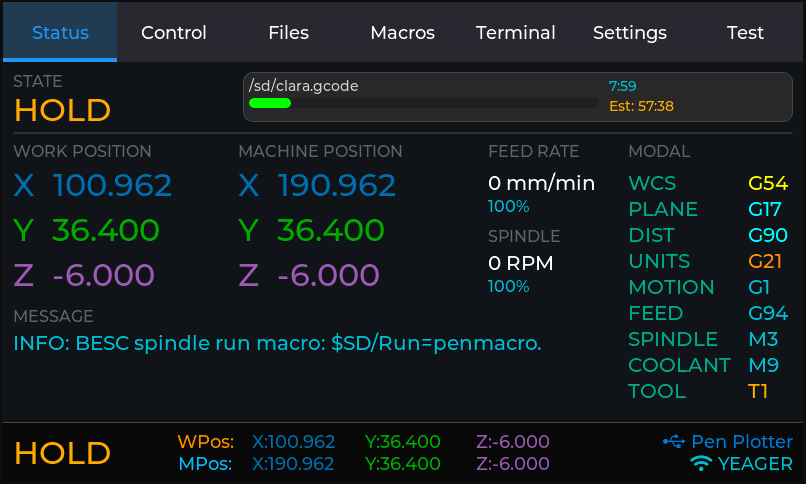

Once I got to using LVGL to actually try and create a UI, it did quite well. It was interesting how it would remember context. For example, I gave it the $SS output from my pen plotter to show as the example text for the terminal. Later, I told it to create a grid of buttons for the macro tab with reasonable names. It picked up from the terminal output that the machine was a pen plotter, so it created Pen Up and Pen Down macros.

For the Files tab, I told it that I wanted a list of gcode files with a play button to run each file and a dropdown to select Flash or SD. At first, the alignment of the filenames was wrong, so I told it, and it fixed it in one try.

It’s pretty wild. While I don’t think you can just “vibe code” your way to a quality solution, with a bit of development background, it’s a useful tool.

I should probably talk with some humans for a bit now.