So can you overcome the reconnect issue if it persists?

This isn’t going to be a problem.

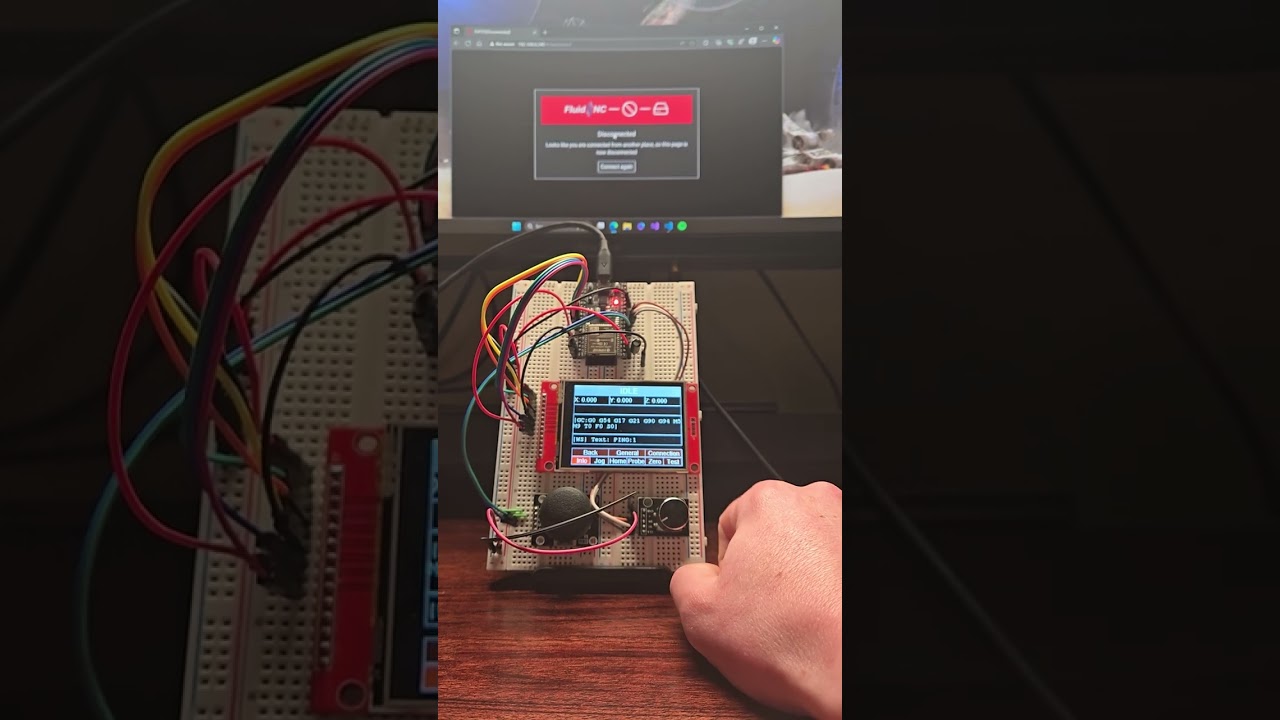

I don’t know how it would behave with WebUI v2, since that already has issues with multiple connections. However, with WebUI v3, as long as I use the same websocket port the WebUI uses (the default is 82 rather than the usual 81), it automatically disconnects the WebUI page when my device connects, just as if you had opened another instance. When you reload the WebUI page, it automatically disconnects my device. I just had to tell my device not to reconnect automatically; otherwise, it would keep disconnecting you from the WebUI page. So I’ll just need to provide a button or something to reconnect.
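A minimal sketch of that "no auto-reconnect" rule, assuming a hypothetical `ReconnectPolicy` class that only tracks connection state. The real websocket library calls are left out, and none of these names come from the project:

```cpp
// Hypothetical connection-state tracker for the device's websocket client.
// Socket calls are omitted; only the "reconnect on demand" decision is shown.
enum class LinkState { Disconnected, Connecting, Connected };

class ReconnectPolicy {
public:
    // Socket closed (e.g. a reloaded WebUI page took over the connection,
    // or a connection attempt failed). Deliberately does NOT schedule a
    // retry, so the device and the WebUI don't keep disconnecting each other.
    void onClosed() { state_ = LinkState::Disconnected; }

    // Socket finished opening.
    void onOpened() { state_ = LinkState::Connected; }

    // Reconnect button pressed. Returns true if the caller should start a
    // connection attempt now; ignored while already connecting or connected.
    bool onReconnectPressed() {
        if (state_ != LinkState::Disconnected) return false;
        state_ = LinkState::Connecting;
        return true;
    }

    LinkState state() const { return state_; }

private:
    LinkState state_ = LinkState::Disconnected;  // start idle until the user connects
};
```

With this shape, a close event simply drops the state to `Disconnected`, and nothing happens until the reconnect button calls `onReconnectPressed()`.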

Lots of stuff to sort out with this, but I’m getting there slowly. For some reason, after homing completes, it goes into alarm. I haven’t gotten around to debugging that one. I’ve been switching between some libraries and trying to get ideas for a UI, specifically a menu, that doesn’t turn into messy code. It won’t have all those menu items; I’m just getting ideas. This is a very different kind of development, but it’s interesting. I want something simple and intuitive.
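One common way to keep menu code from getting messy is to make it table-driven: each entry is a label plus an action, and the rotary encoder only moves an index. This is a sketch of that general idea with assumed names (`MenuItem`, `Menu`), not the approach the project actually uses:

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// One row of the menu: adding an item means adding a row, not another
// branch in the drawing and input-handling code.
struct MenuItem {
    std::string label;
    std::function<void()> action;  // run when the encoder button is pressed (may be empty)
};

class Menu {
public:
    explicit Menu(std::vector<MenuItem> items) : items_(std::move(items)) {}

    // Encoder turned by `steps` detents (negative = counter-clockwise).
    // The selection wraps around at both ends.
    void rotate(int steps) {
        if (items_.empty()) return;
        const int n = static_cast<int>(items_.size());
        selected_ = ((selected_ + steps) % n + n) % n;
    }

    // Encoder button pressed: run the selected item's action, if it has one.
    void press() const {
        if (!items_.empty() && items_[selected_].action) items_[selected_].action();
    }

    std::size_t selectedIndex() const { return static_cast<std::size_t>(selected_); }

    // Precondition: the menu is not empty.
    const std::string& selectedLabel() const { return items_[selected_].label; }

    std::size_t size() const { return items_.size(); }

private:
    std::vector<MenuItem> items_;
    int selected_ = 0;
};
```

The drawing code can then loop over the items and highlight `selectedIndex()`, so it never needs to know what any individual entry does.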

You are a genius! Inspiring! All I want is a joystick, and you went and added an upstairs with a kitchen sink and a spare bedroom.

Lol, definitely not a genius in this arena. Just have enough related knowledge to be able to figure things out. It really doesn’t do much at the moment other than display stuff.

That is super cool. With the websocket, you could pair some Wi-Fi-connected devices that have Bluetooth radios with available Bluetooth gamepad controllers. I hooked up a Wiimote to my Maslow once, and that was only possible because of the websocket connection. Your work is opening up many possibilities.

Ok ok, now you’re talking

Wow, i love it!

This is really, really cool.

So I have a few ESP32s. Would I need a display, a joystick, and a selector? What are you using for code?

I wouldn’t go buy anything yet. It’s going to be a while until it’s ready for sharing. Once the code is done, I’ll probably need to sort out some kind of PCB (which I’ve never done) and make a case for it.

But this is what I’m using currently. Some of that might change a bit for a PCB.

ESP32 - same as Jackpot

Display - Amazon.com

Rotary Encoder - Amazon.com

Joystick - Amazon.com

To fit them on a breadboard, I had to desolder the angled pin headers on the encoder and joystick and replace them with straight pin headers.

There’s also a 10 µF capacitor, but that’s only so I don’t have to press the boot button when flashing.

This is C++ code using PlatformIO, so flashing it works just like flashing FluidNC from source.

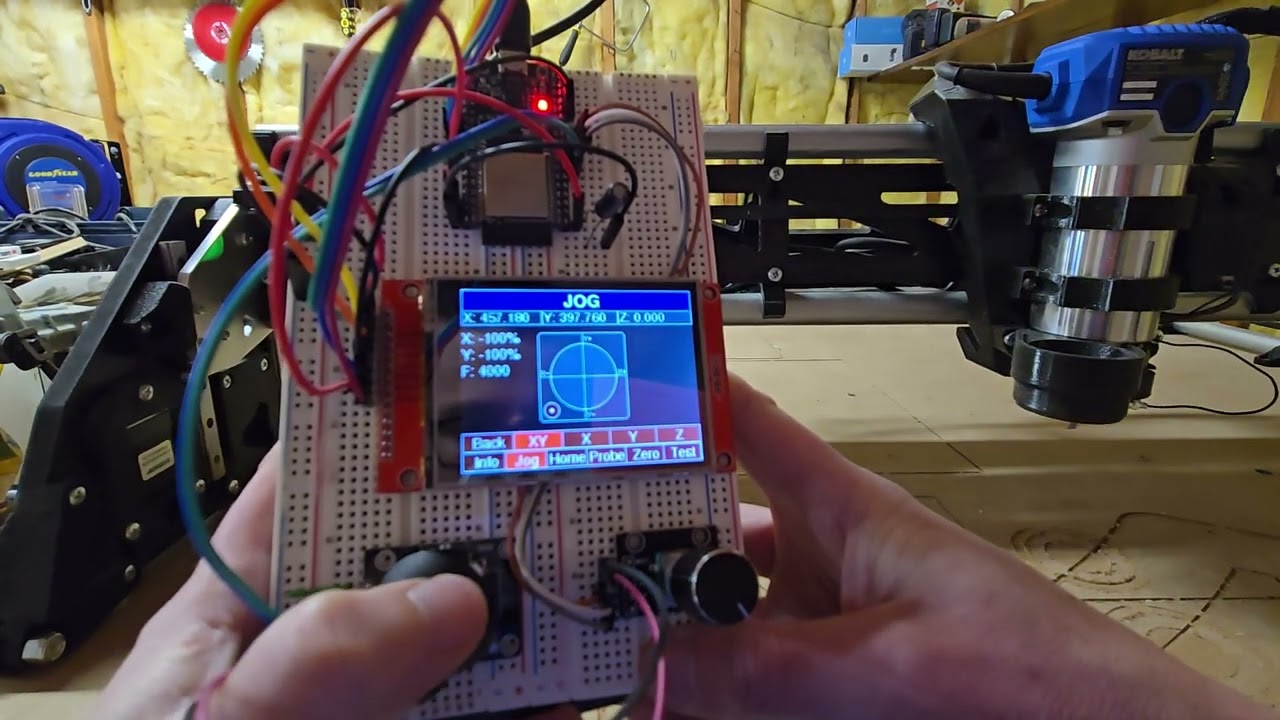

I’ve got some UI stuff to sort out, but this is really starting to come together. I changed the rotary encoder library, and the menu feels so much smoother. I also got auto-reporting working, which I couldn’t figure out before. I tested displaying a hold message and resuming. I implemented homing for all axes, as well as for X, Y, and Z individually. It’s pretty wild how a little screen, a joystick, and a rotary encoder can make such an efficient interface.
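For the hold message and the homing menu, here is a sketch of the small pieces involved. It assumes the Grbl-style status report format (`<State|MPos:...|FS:...>`) that FluidNC sends, the Grbl real-time cycle start character `~` for resuming, and that the firmware accepts single-axis homing commands (`$HX`, `$HY`, `$HZ`). The function names are hypothetical:

```cpp
#include <cstddef>
#include <string>

// Real-time cycle start character in Grbl-compatible firmware; sent to resume from a hold.
constexpr char kCycleStart = '~';

// Pull the machine state out of a status report such as
// "<Hold:0|MPos:10.000,20.000,0.000|FS:0,0>". Substates like the ":0" in
// "Hold:0" are dropped. Returns "" for anything that isn't a status report.
std::string parseMachineState(const std::string& line) {
    if (line.size() < 2 || line.front() != '<') return "";
    const std::size_t end = line.find_first_of("|:>", 1);
    if (end == std::string::npos) return "";
    return line.substr(1, end - 1);
}

// True when the UI should show the hold message and offer a resume (kCycleStart).
bool isHold(const std::string& state) { return state == "Hold"; }

// Command for the homing menu: axis 0 means "home all", otherwise 'X', 'Y' or 'Z'.
std::string homingCommand(char axis) {
    return axis == 0 ? std::string("$H") : std::string("$H") + axis;
}
```

Each auto-reported status line goes through `parseMachineState()`, and the display only has to react when the returned state changes.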

Just wanna put it out there: you’re only a couple of features away from a full pendant, one that looks to be a bit less expensive and has a much larger screen.

Yeah, not quite sure how I got from messing with websockets to having a full pendant.

The main thing I would need to add is being able to select a file and run from SD.

This is actually the same size (and I think the same display) as the CYD version of the pendant. Technically, I might be able to use that, but I like having the ESP32 separate. Also, I’m not sure there are enough pins to add what I need.

At some point, I’m probably going to need some help getting this thing off a breadboard but I haven’t gotten there yet.

If you end up just short, you can try the ESP32-S3. It has a few more.

That’s what runs the M5 Dial, as well as the 7.0" Elecrow screen they are working on an interface for.

I have enough pins for what I’m using now. That comment was specific to trying to use one of those CYD boards with the ESP32 and display combined.

There’s this weird line I’m trying not to cross. With a display this small and with limited fanciness, handling the UI manually by drawing text and shapes is practical. I looked at LVGL but dropped it after getting lost in the details.

I’ve decided this is not going to be a full pendant. It started going in that direction but that’s more effort than I want to put into this right now. I think to do that, I’ll need to try out using LVGL. Otherwise, handling selecting a file is going to be a pain. Also, there’s just limited space for the menu without implementing horizontal scrolling. But I like everything being visible. It makes it more intuitive.

I did buy one of the CYD screen/ESP32 combos to try out. If I can figure out how to connect both a joystick and rotary encoder to it, it would simplify wiring. Otherwise, I need some kind of PCB or protoboard. If it doesn’t work out, I’ll still use it for trying out LVGL.

As far as functionality, it got me thinking about my workflow and what I really want it to do. It normally looks like this.

- Upload a gcode file via the WebUI running in browser on my computer (which is in the house away from the LR3).

- Home the machine via the WebUI on my phone.

- Jog the machine to the starting position via the WebUI on my phone.

- Run the gcode file via the WebUI from my phone. The WebUI on my phone is also used to resume from a hold.

I never have a reason to probe or zero from the WebUI. This is handled in the gcode files.

My original goal was to implement the functionality of my joystick jogging and hold monitor extensions, and I now have something equivalent.
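The joystick jogging side could look roughly like this: a sketch that turns one pair of joystick readings into a Grbl-style incremental jog command (`$J=G91 G21 ...`). The 12-bit ADC range, centre value, dead zone, maximum feed, and the 0.1 s time slice per command are all assumptions for illustration, not values from the extension or the device:

```cpp
#include <algorithm>
#include <cmath>
#include <cstdio>
#include <cstdlib>
#include <string>

// Assumed values for illustration only.
constexpr int kCenter = 2048;               // mid-scale of a 12-bit ADC (0..4095)
constexpr int kDeadZone = 200;              // counts around centre treated as "no input"
constexpr double kMaxFeed = 3000.0;         // mm/min per axis at full deflection
constexpr double kSliceSec = 0.1;           // seconds of motion requested per command
constexpr unsigned char kJogCancel = 0x85;  // Grbl 1.1 real-time jog cancel byte

// Normalise a raw reading to -1..1; 0 inside the dead zone.
double axisValue(int raw) {
    const int offset = raw - kCenter;
    if (std::abs(offset) < kDeadZone) return 0.0;
    return std::clamp(offset / static_cast<double>(kCenter), -1.0, 1.0);
}

// Build an incremental jog for one time slice, or "" when the stick is centred.
std::string jogCommand(int rawX, int rawY) {
    const double vx = axisValue(rawX) * kMaxFeed;  // mm/min along X
    const double vy = axisValue(rawY) * kMaxFeed;  // mm/min along Y
    if (vx == 0.0 && vy == 0.0) return "";
    const double feed = std::hypot(vx, vy);        // path speed, mm/min
    const double dx = vx / 60.0 * kSliceSec;       // mm travelled in one slice
    const double dy = vy / 60.0 * kSliceSec;
    char buf[64];
    std::snprintf(buf, sizeof buf, "$J=G91 G21 X%.3f Y%.3f F%.0f", dx, dy, feed);
    return buf;
}
```

When the stick returns to centre and `jogCommand()` returns an empty string, sending `kJogCancel` stops whatever motion is still queued. Keeping each slice short limits how far the machine coasts after the stick is released.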

So, where am I going from here?

I’m working on something to implement macros using the gcode parameters and expressions functionality. Reading a file over the websocket is possible, but it’s more complicated than what I’m doing now, and I just don’t want to deal with that. So I’m trying to write a single gcode file that defines the macros and provides metadata about them. With a variable set to 0, it outputs messages containing the metadata (index, name, description) that I can parse. With a value greater than zero, it executes that macro’s code. To be able to run a file, the plan is to have one of those macros run a specific gcode file that in turn runs the current file. I’ll just update that gcode file whenever I add gcode I want to run. Or I can still just start it from the WebUI.
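On the device side, parsing those metadata messages could be as simple as this sketch. It assumes the macro file reports each macro as a Grbl-style message line such as `[MSG:MACRO|2|Park|Move to the park position]`; the `MACRO` tag, the `|` separators, and the example macro are hypothetical choices, not an existing FluidNC format:

```cpp
#include <cstddef>
#include <optional>
#include <string>

struct MacroInfo {
    int index;
    std::string name;
    std::string description;
};

// Parse one metadata line. The "[MSG:MACRO|index|name|description]" layout
// is a hypothetical convention; anything else returns std::nullopt.
std::optional<MacroInfo> parseMacroMessage(const std::string& line) {
    const std::string prefix = "[MSG:MACRO|";
    if (line.rfind(prefix, 0) != 0 || line.back() != ']') return std::nullopt;
    const std::string body = line.substr(prefix.size(), line.size() - prefix.size() - 1);

    const std::size_t p1 = body.find('|');
    if (p1 == std::string::npos) return std::nullopt;
    const std::size_t p2 = body.find('|', p1 + 1);
    if (p2 == std::string::npos) return std::nullopt;

    // The index must be a short run of digits so std::stoi cannot throw.
    const std::string indexText = body.substr(0, p1);
    if (indexText.empty() || indexText.size() > 6 ||
        indexText.find_first_not_of("0123456789") != std::string::npos) {
        return std::nullopt;
    }

    MacroInfo info;
    info.index = std::stoi(indexText);
    info.name = body.substr(p1 + 1, p2 - p1 - 1);
    info.description = body.substr(p2 + 1);  // may itself contain '|'
    return info;
}
```

Collecting every successful parse from the listing run gives the menu its entries; selecting one would then run the macro file again with that index.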

So, future workflow with this would be:

- Upload a gcode file via the WebUI running in browser on my computer (which is in the house away from the LR3).

- Via the WebUI on my computer, update the gcode file that defines which file the run macro uses.

- Home the machine using this device.

- Jog the machine to the starting position using this device.

- Run the gcode file via the run macro using this device. This device is also used to resume from a hold.

I like that.

Neat project/experiments. Curious whether you have an iPhone or an Android (with a USB-C connector)?