It tracks features from image to image, then computes the rigid transform between views to estimate the location and orientation of the camera at every photo. It also needs to estimate the camera distortion model. The interesting thing is that it cannot determine the scale: images of a model of a barn from 60cm away look the same as images of a real barn from 60m away.
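To make the scale point concrete, here is a minimal sketch using an idealized pinhole camera with no distortion. The barn corner coordinates and the focal length are made-up numbers for illustration, and this is not how Meshroom does it internally. It just shows that shrinking the scene and the camera distance by the same factor gives the same pixel positions.

```python
def project(point, focal_length=1000.0):
    """Pinhole projection of a camera-frame point (x, y, z) to pixel offsets."""
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)

# Hypothetical barn corners in metres, in the camera frame, about 60 m away.
real_barn = [
    (-5.0, -3.0, 60.0),
    (5.0, -3.0, 60.0),
    (5.0, 3.0, 65.0),
    (-5.0, 3.0, 65.0),
]

# A 1:100 model seen from 60 cm: every coordinate shrinks by the same factor.
scale = 0.01
model_barn = [(x * scale, y * scale, z * scale) for x, y, z in real_barn]

real_pixels = [project(p) for p in real_barn]
model_pixels = [project(p) for p in model_barn]

def close(a, b, tol=1e-9):
    """Compare two pixel positions up to floating-point noise."""
    return abs(a[0] - b[0]) < tol and abs(a[1] - b[1]) < tol

# True when both scenes land on the same pixels, so the images cannot tell them apart.
same_image = all(close(r, m) for r, m in zip(real_pixels, model_pixels))
print(same_image)
```

The scale factor cancels in `x / z` and `y / z`, which is why no amount of extra photos fixes it. You need one known real-world measurement.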

You are right. The features in the background don’t affect the model itself, but they are used to determine “where” each picture was taken in relation to the model, which sets up the 3D workspace the model is generated within.

ah. interesting. I didn’t realize they were taking advantage of the background during the process. The one I played around with a while back didn’t care about the background. I used a lazy susan and bright lighting to get the best results.

I thought about that, but then thought that perhaps the stitching algorithm might want/need to take advantage of either background or directed lighting for shape and feature cues, not to mention if it’s also trying to do any texturing. A moving shadow line could confuse it. And having no shadows might make it difficult to determine shape.

You want no shadows, but lots of unique texture. The axe head should be pretty easy to track, since it has very little reflection and lots of texture. Indirect, diffuse lighting is the best, because it lets you see the texture well, and doesn’t add its own features.

For the record, I have never used Meshroom, but I have developed 3D-from-video and other similar software for computer vision and perception. So they may be doing something completely different.

Easter quiz:

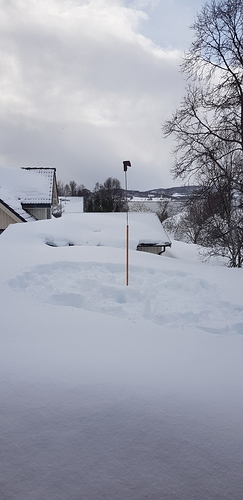

What is happening in this picture?

A) heathen Easter ritual for people close to the north pole?

B) warning totem to neighbors to stay away during lockdown?

C) super DIY Meshroom photo setup?

Four!

Northern Golf?

Always wondered if golfers up north used white balls when playing in the snow…

My thought is the ball needs to be neon pink…

Bright orange I think.

I ended up asking on reddit. We couldn’t figure it out, even after dancing around the heathen totem many times and taking hundreds upon hundreds of images. Meshroom is not as automatic as it claims.

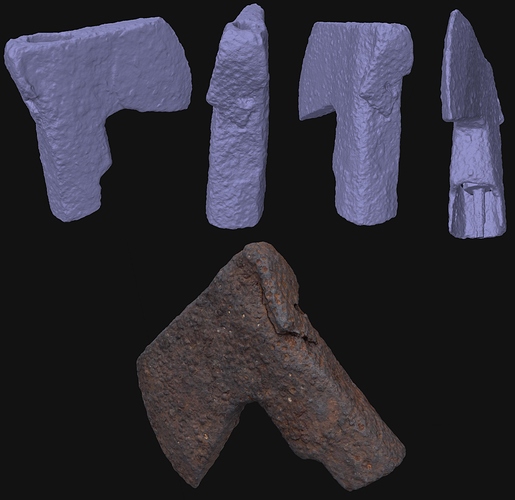

Some random person on reddit heard our cries for help. He/she took the time to mask all images, and make a model in Agisoft Metashape. Look at this, it’s amazing!

ah. the power of reddit

It is quite the super computer, just a little hard to coerce.

not really. The magic words are, “I’ve tried this all day and have decided this can’t be done”.

Too many cocky people on reddit that would take that as an immediate triple dog dare

Well - the person that helped me has not been cocky at all - just pouring out tips and advice for photogrammetry, in addition to making the model. I’m happy to meet such nice people out there, randomly. There is hope for humanity. Anyways - here is the result! (I didn’t care about precise size for the first print try. Will try to scale it properly next print.)
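For the scaling part, one way to do it (a rough sketch, not something Meshroom or Metashape does for you in this form) is to measure one real distance on the object, find the same two points on the mesh, and multiply every vertex by the ratio. The vertex list and the 150 mm measurement below are hypothetical values.

```python
import math

def scale_factor(point_a, point_b, real_distance_mm):
    """Ratio that maps the model distance between two points to a measured one."""
    model_distance = math.dist(point_a, point_b)
    return real_distance_mm / model_distance

def rescale(vertices, factor):
    """Uniformly scale every vertex about the origin."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]

# Hypothetical mesh vertices in arbitrary reconstruction units.
vertices = [(0.0, 0.0, 0.0), (0.3, 0.4, 0.0), (0.0, 0.0, 1.2)]

# Assume a ruler says the first two points are 150 mm apart on the real object.
factor = scale_factor(vertices[0], vertices[1], real_distance_mm=150.0)
scaled = rescale(vertices, factor)

print(factor)
print(math.dist(scaled[0], scaled[1]))
```

After this the mesh is in millimetres, which is what most slicers expect.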

That is very cool.

Thanks! Next step is borrowing a Viking axe from the 1300s that my father-in-law very much wants to make a replica of. The museum curators won’t let him bring it to his forge. If I could capture it with photogrammetry in safe conditions, I could print copies that he can throw around in his smithy without any worries.