I discovered the robot acts drunk when the battery is low. I didn’t plug it in overnight, and when I told it to go somewhere in the morning, it went in the general direction and then plowed into a box.

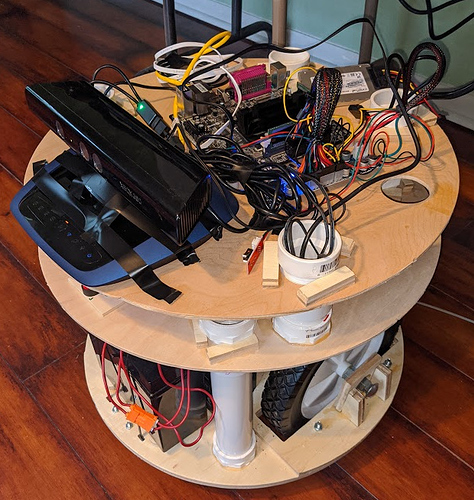

Here’s a recent photo (forgive the electrical tape… it’s temporary).

The laser scanner is on the second level with all the power converters and terminal strips (you can’t see it). I want to redo the support pipes there to minimize their cross-section and reduce the amount of the laser scan that’s lost. I like the laser scanner being there since it’s relatively protected from 8-year-old humans and 1-year-old dogs.

I repurposed an old DD-WRT router and configured it in client mode to function as a local Ethernet switch/Wi-Fi bridge. Now the mapper, computer, and soon-to-be-installed Jetson Nano will all talk via Ethernet.

I’m working on getting my LowRider running again (finally getting around to putting the new belt holders on) so I can cut a new platform to relocate the Kinect and install the Jetson and cameras.