The camera’s resolution is about 7 pixels per mm (roughly 0.14 mm per pixel), so not quite 0.1 mm resolution. The webcam (a Logitech C170) is running at 640x480. It also supports 1024x768, but the video got laggy at that resolution, which I didn’t want to have to worry about. On a separate project I used a Raspberry Pi camera and adjusted its focus by rotating the lens; it can focus very close, for a resolution of roughly 0.004 mm. At higher resolutions the camera will generally be closer to the workpiece, so you might have to worry about collisions depending on your depth of cut, unless you have a removable camera mount.
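To make the resolution arithmetic concrete, here is a small sketch of how the scale could be estimated by imaging a feature of known length. The 10 mm / 70 px calibration values are hypothetical, chosen to match the ~7 px/mm figure above, and the function names are just for illustration.

```python
def pixels_per_mm(measured_px: float, known_mm: float) -> float:
    """Image scale from a feature of known length measured in the image."""
    if known_mm <= 0:
        raise ValueError("known_mm must be positive")
    return measured_px / known_mm


def mm_per_pixel(px_per_mm: float) -> float:
    """Size of one pixel on the work surface, i.e. the best-case resolution."""
    return 1.0 / px_per_mm


def field_of_view_mm(width_px: int, height_px: int, px_per_mm: float) -> tuple[float, float]:
    """Area of the work surface covered by one frame, in mm."""
    return width_px / px_per_mm, height_px / px_per_mm


# Hypothetical calibration: a 10 mm feature spans 70 px in a 640x480 frame.
scale = pixels_per_mm(70, 10.0)
print(f"{scale:.1f} px/mm, {mm_per_pixel(scale):.3f} mm/px")  # 7.0 px/mm, 0.143 mm/px
w, h = field_of_view_mm(640, 480, scale)
print(f"field of view about {w:.0f} x {h:.0f} mm")  # field of view about 91 x 69 mm
```

The same arithmetic shows the trade-off with the close-focus camera: 0.004 mm per pixel is 250 px/mm, so a 640-pixel-wide frame at that scale would only cover about 2.6 mm of the workpiece.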

Right now the plugin pulls the image from the OctoPi webcam snapshot URL, “/webcam/?action=snapshot” (the same one the timelapse uses), but nothing prevents it from pulling from a different server. You could mount a separate webcam-only server on the tool head, which would give you lots of freedom and spare you from running long ribbon cables, USB extensions, or whatever.
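Since the snapshot is just an HTTP GET, pointing it at a different server mostly comes down to changing the base URL. Below is a minimal sketch using only the Python standard library; it is not the plugin’s actual code, and the hostnames are hypothetical. A standalone mjpg-streamer instance typically serves snapshots at `/?action=snapshot` on port 8080 rather than behind OctoPi’s `/webcam/` prefix, so the path is left configurable.

```python
import urllib.request
from urllib.parse import urljoin

# Snapshot path used by the OctoPi webcam (same one the timelapse uses).
OCTOPI_SNAPSHOT_PATH = "/webcam/?action=snapshot"


def snapshot_url(base_url: str, path: str = OCTOPI_SNAPSHOT_PATH) -> str:
    """Combine a server base URL with a snapshot path (an absolute path replaces any base path)."""
    return urljoin(base_url, path)


def fetch_snapshot(base_url: str, path: str = OCTOPI_SNAPSHOT_PATH, timeout: float = 5.0) -> bytes:
    """Download one JPEG frame; raises urllib.error.URLError if the server is unreachable."""
    with urllib.request.urlopen(snapshot_url(base_url, path), timeout=timeout) as resp:
        return resp.read()


if __name__ == "__main__":
    # Hypothetical hosts: the OctoPi itself, and a separate camera server on the tool head.
    print(snapshot_url("http://octopi.local"))
    print(snapshot_url("http://toolhead-cam.local:8080", "/?action=snapshot"))
```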

I think there are a lot of possible applications for a camera on the tool head, like optical homing, and maybe with a laser at a shallow angle you could home Z as well. Finding the edge of the workpiece might not give good contrast in general, and focus might not be great depending on Z height, but with a couple of laser line generators that should not be an issue, and you could precisely identify edges regardless of the workpiece color.
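The shallow-angle laser idea is basically laser triangulation. Assuming the camera looks straight down and the laser is tilted at an angle θ above the work surface, a height change Δz moves the line sideways in the image by Δz / tan θ, so Δz = shift · tan θ. At θ = 15° a 1 mm step moves the line about 3.7 mm, which amplifies small height differences. At the workpiece edge the line position jumps, and that jump depends on height rather than color. The sketch below is a rough illustration of this idea, not something the plugin does: it picks the brightest pixel per row as the line, looks for a jump, and converts the jump to a height. The angle, threshold, and synthetic image are all made-up values.

```python
import math


def line_position(row):
    """Column index of the brightest pixel in one image row (assumed to be the laser line)."""
    return max(range(len(row)), key=lambda i: row[i])


def find_edge(positions, min_jump_px):
    """First row index where the line's column jumps by at least min_jump_px,
    i.e. where the line steps onto a surface at a different height. None if no jump."""
    for i in range(1, len(positions)):
        if abs(positions[i] - positions[i - 1]) >= min_jump_px:
            return i
    return None


def height_from_shift(shift_px, px_per_mm, laser_angle_deg):
    """Height difference (mm) implied by a sideways line shift, for a laser tilted
    laser_angle_deg above the surface and a camera looking straight down.
    The sign depends on which side the laser is mounted."""
    shift_mm = shift_px / px_per_mm
    return shift_mm * math.tan(math.radians(laser_angle_deg))


# Synthetic 7-row image, 100 px wide: the line sits near column 40 on the
# spoilboard, then near column 62 once it crosses onto the workpiece.
rows = []
for col in [40, 40, 41, 40, 62, 62, 61]:
    row = [10] * 100
    row[col] = 255
    rows.append(row)

positions = [line_position(r) for r in rows]
edge_row = find_edge(positions, min_jump_px=5)
step = positions[edge_row] - positions[edge_row - 1]
print(edge_row, round(height_from_shift(step, 7.0, 15.0), 2))  # prints: 4 0.84
```

Taking the brightest single pixel is the crudest possible line detector; an intensity-weighted centroid across the line’s width would give sub-pixel positions, which matters at ~7 px/mm.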

I was thinking it would be funny to have a variety of pens and automatically color a page from a coloring book. A coloring book is make-work to begin with, so having a robot do it is twice as pointless, sorta.

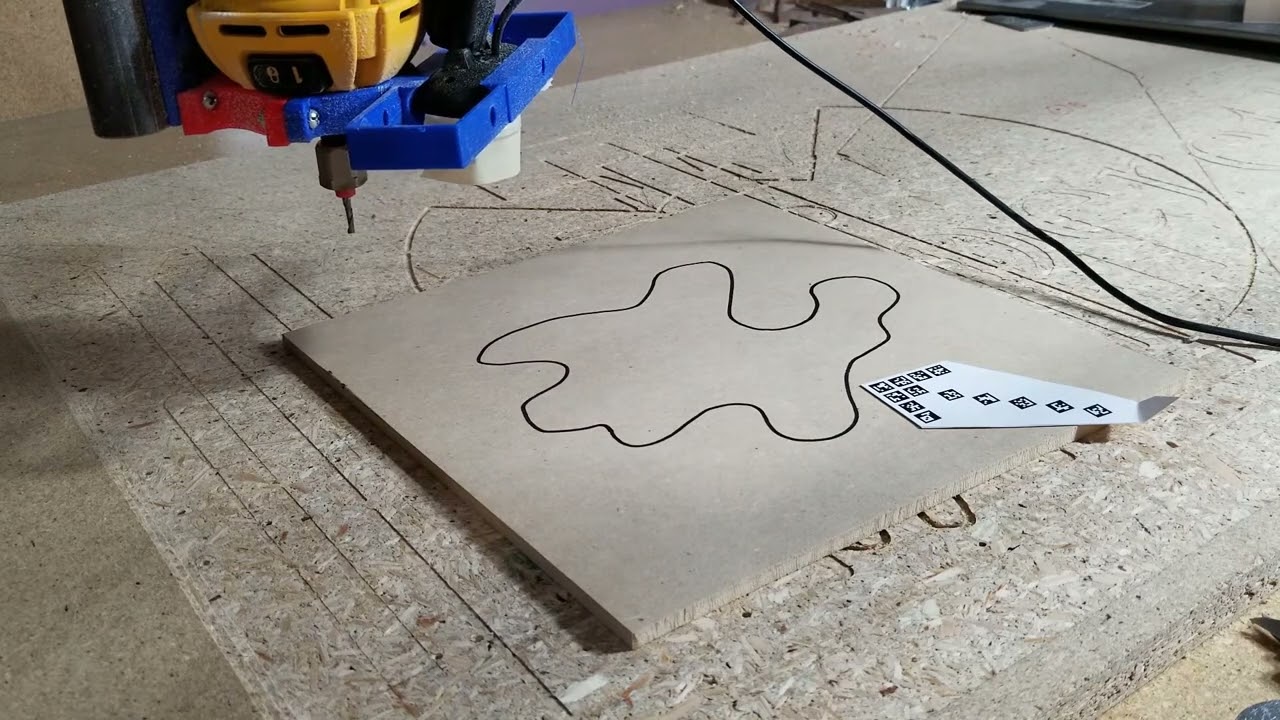

Tracing an existing piece is a good thought. It might take a bit of trial and error, but it should be reasonably precise. It could definitely be useful for cutting a hole to fit an existing part, or cutting a part to fit an existing hole, in cases where going from image to Inkscape to CAM is a headache. As an additional benefit, the cutout is naturally aligned to the workpiece, whereas with a separate image-to-Inkscape process you have to take extra care to align the toolpath to the workpiece, if that matters.
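The reason a traced cutout comes out aligned is that each pixel maps directly to a machine coordinate. Here is a minimal sketch of that mapping plus a naive G-code emitter. It is an illustration under several assumptions, none taken from the plugin: `cam_x`/`cam_y` are the machine coordinates under the image center when the snapshot was taken (tool position plus camera offset), the image axes are aligned with the machine axes with image rows growing toward −Y, lens distortion is ignored, and there is no tool-radius compensation, which a real hole-to-fit-a-part cut would need.

```python
def pixel_to_machine(u, v, cam_x, cam_y, px_per_mm, width=640, height=480):
    """Map image pixel (u, v) to machine (x, y) in mm, relative to the image center."""
    cx, cy = width / 2, height / 2
    x = cam_x + (u - cx) / px_per_mm
    y = cam_y - (v - cy) / px_per_mm  # image v grows downward; assumed to be machine -Y
    return x, y


def contour_to_gcode(contour_px, cam_x, cam_y, px_per_mm, depth, safe_z=5.0, feed=300):
    """Naive closed-loop toolpath along a traced contour (no tool-radius offset)."""
    if len(contour_px) < 2:
        raise ValueError("contour needs at least two points")
    pts = [pixel_to_machine(u, v, cam_x, cam_y, px_per_mm) for u, v in contour_px]
    lines = [
        f"G0 Z{safe_z:.3f}",                       # retract to a safe height
        f"G0 X{pts[0][0]:.3f} Y{pts[0][1]:.3f}",   # rapid to the start point
        f"G1 Z{-depth:.3f} F{feed}",               # plunge to cutting depth
    ]
    for x, y in pts[1:] + pts[:1]:                 # visit every point, then close the loop
        lines.append(f"G1 X{x:.3f} Y{y:.3f} F{feed}")
    lines.append(f"G0 Z{safe_z:.3f}")              # retract when done
    return lines


# Hypothetical trace: three pixels near the image center, camera centered at X100 Y50.
for line in contour_to_gcode([(320, 240), (327, 240), (327, 233)], 100.0, 50.0, 7.0, depth=1.0):
    print(line)
```

In practice the contour would come from something like an edge or threshold pass over the snapshot; the point here is only that no separate alignment step is needed because the coordinates are already in the machine frame.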

Of course you could just break out the jigsaw, but that requires some hand-eye skill, and anyway it is so much less cool.